Containers and Kubernetes are best with Tuxera Fusion

Tuxera SMB (now called Fusion File Share by Tuxera) is a high-performance alternative to open-source Samba and other SMB/CIFS servers. Its state-of-the-art modular architecture runs in either user space or kernel space. This enables Fusion File Share to achieve maximum I/O throughput and low latency, with the lowest CPU usage and memory footprint compared to other solutions. It supports the latest features of the Server Message Block (SMB) protocol, including SMB 2, SMB 3, and all related security features.

One of the key benefits of Fusion File Share is its ability to automatically fail over and recover a connection. If a server node fails, terminates, or is shut down without any prior indication, the SMB client detects that the node is unavailable only once a time-out or keep-alive mechanism triggers. Connection recovery that depends on this detection alone is unreliable and slow. Fusion File Share instead reconnects using the TCP “tickle ACK” mechanism: it sends an ACK with invalid fields, which triggers a set of TCP exchanges that cause the client to promptly recognize the stale connection and reconnect.

To test recovery, Fusion File Share is wrapped inside a Docker container and deployed to a Kubernetes cluster. Kubernetes is a portable, extensible open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation.[1] The platform is self-healing by nature.[2] This means Kubernetes restarts containers that fail, replaces and reschedules containers when nodes die, kills containers that don’t respond to user-defined health checks, and doesn’t advertise containers to clients until they are ready to serve. As a result, it’s fairly easy to test connection failover and recovery with Fusion File Share running as a container inside a Kubernetes cluster.
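
As a rough illustration of the user-defined health checks mentioned above, the following Python sketch tests whether a TCP port accepts connections, which is similar in spirit to a Kubernetes TCP socket probe. The function name `tcp_port_ready` is hypothetical, port 445 is assumed because it is the standard SMB port, and this article does not state which kind of probe, if any, was configured for the Fusion File Share container.

```python
import socket


def tcp_port_ready(host: str, port: int = 445, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Hypothetical helper: port 445 (standard SMB) is an assumed default,
    not a value taken from the test setup.
    """
    try:
        # create_connection completes the TCP handshake; closing the socket
        # right away is enough for a simple reachability check.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, time-outs, and unreachable hosts.
        return False
```

A check like this only shows that something is listening on the port. It does not prove that the SMB server behind it can actually serve a share.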

A Windows client connects to the Fusion File Share mount, which is served from inside the Kubernetes cluster. A file copy to the mount is then started to keep the connection continuously active. While the file copies, the container running Fusion File Share is explicitly killed. This causes Kubernetes to reschedule Fusion File Share onto another Kubernetes node and, within a few seconds, the copy to the Fusion File Share mount resumes.

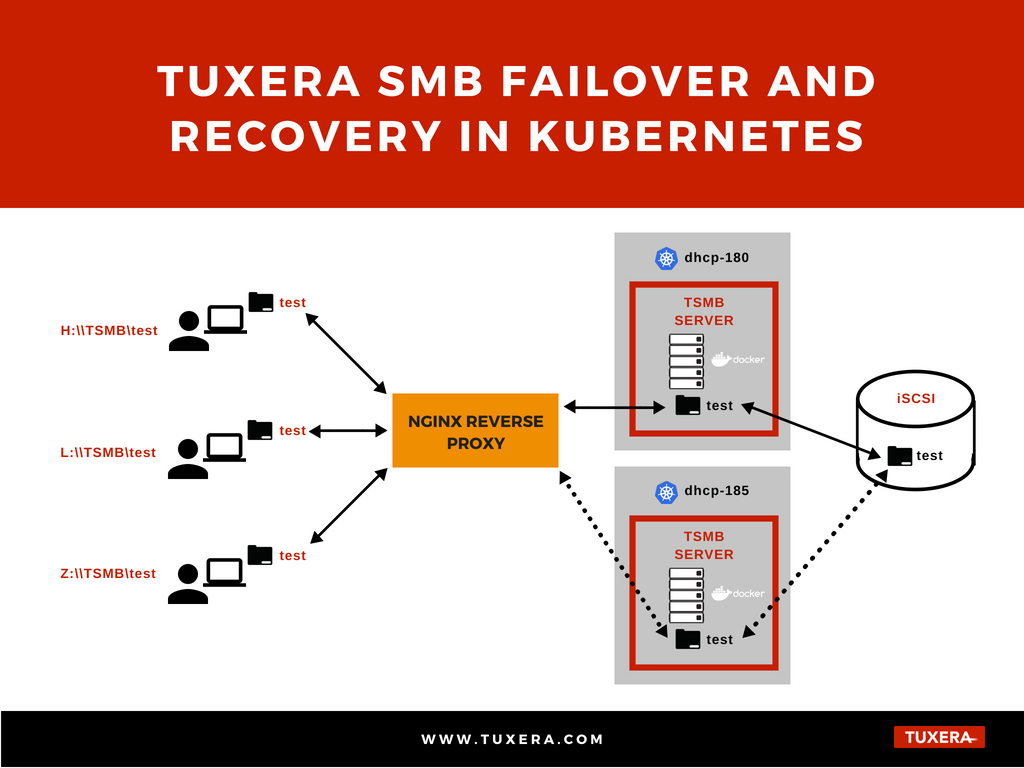

Figure 1 shows the test setup. Three different users have Windows 10 desktops acting as SMB clients. They have mounted a shared folder named “test” to different drives on their machines with the path \\tshare\test. The folder is served by Fusion File Share running inside a 2-node, on-premises Kubernetes cluster consisting of the nodes dhcp-180 and dhcp-185. In the figure, Fusion File Share is served from host dhcp-180. The cluster nodes use iSCSI storage from an external iSCSI target. An NGINX reverse proxy runs between the users and the Kubernetes cluster because, as of Kubernetes 1.9.x, it’s impossible to natively obtain a load-balanced external IP or ingress for an on-premises Kubernetes cluster.[3]
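
To make the role of the reverse proxy more concrete, here is a minimal Python sketch of a TCP relay that accepts client connections on one address and forwards the bytes to a backend. It is not NGINX and not the configuration used in this test. The names `start_relay` and `_pipe` are hypothetical, and the sketch only assumes that the proxy forwards plain TCP traffic to whichever address exposes the SMB service.

```python
import asyncio


async def _pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until the reader reaches end of stream."""
    try:
        while True:
            data = await reader.read(65536)
            if not data:
                break
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass  # one side dropped the connection; fall through to cleanup
    finally:
        writer.close()


async def start_relay(listen_host: str, listen_port: int,
                      backend_host: str, backend_port: int) -> asyncio.AbstractServer:
    """Listen on listen_host:listen_port and relay each connection to the backend."""

    async def handle(client_reader, client_writer):
        try:
            backend_reader, backend_writer = await asyncio.open_connection(
                backend_host, backend_port
            )
        except OSError:
            client_writer.close()  # backend unavailable: drop the client
            return
        # Relay both directions concurrently until each side has closed.
        await asyncio.gather(
            _pipe(client_reader, backend_writer),
            _pipe(backend_reader, client_writer),
        )

    return await asyncio.start_server(handle, listen_host, listen_port)
```

In a setup like the one in Figure 1, the relay would listen where the Windows clients connect and forward to the address at which the cluster exposes the SMB port. Both addresses are deployment-specific and are not given in this article.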

To demonstrate Fusion File Share’s failover and recovery, a file is copied from the user’s local disk on a Windows computer to the mount point \\tshare\test. The copy starts at a speed determined by the network. Then, to force a failure, the pod tsmb-server, which runs the Fusion File Share container, is deleted during the copy operation. Kubernetes detects that the container is gone and starts the Fusion File Share server container on another node. The end user sees the copy speed drop to zero for a very short while, after which the copy resumes. The key point is that the copy neither fails nor is interrupted by an error. This gives the user a seamless experience, and they might not even notice the server failure on node dhcp-180 and the subsequent recovery on node dhcp-185. The Fusion File Share SMB server ensures that the client waits until the new SMB server instance has started, then reconnects and resumes the file copy. The video clip below demonstrates this.
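
The pause in the copy can also be measured rather than just watched. The following Python sketch copies a file chunk by chunk, records when each chunk finishes, and reports the longest gap between chunks. The function names `copy_in_chunks` and `longest_stall` and the 1 MiB chunk size are assumptions made for illustration. The test described here used an ordinary Windows file copy, not this script.

```python
import time
from typing import BinaryIO, List


def copy_in_chunks(src: BinaryIO, dst: BinaryIO,
                   chunk_size: int = 1024 * 1024) -> List[float]:
    """Copy src to dst chunk by chunk and return the time each chunk finished."""
    finished = [time.monotonic()]  # start time, so the first gap is measured too
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        dst.write(chunk)
        dst.flush()  # hand the data to the OS before taking the timestamp
        finished.append(time.monotonic())
    return finished


def longest_stall(timestamps: List[float]) -> float:
    """Return the largest gap, in seconds, between consecutive timestamps."""
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    return max(gaps, default=0.0)
```

To use it, open a local file for reading and a file on the mounted drive for writing (both in binary mode), pass them to `copy_in_chunks`, and call `longest_stall` on the result. Because the operating system may buffer writes, the measured gap is only an approximation of the time the server was unavailable.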

Fusion File Share leverages the self-healing feature of Kubernetes to move the Fusion File Share SMB server container from one host to another when a failure occurs, giving users a seamless experience during a file copy. Because a good end-user experience is key to success, this test demonstrates one of Fusion File Share SMB’s reliability features: automatic recovery from failure without interrupting the user’s work. The end goal of such a feature is to provide the best user experience, and here we have created a way to test that we can deliver on that promise. In addition, many organizations are now containerizing their infrastructure, so Fusion File Share by Tuxera is also a great fit for customers running Kubernetes on their premises. These users can combine the features and benefits of Kubernetes and Fusion File Share.
